![](https://adoptostaging.blob.core.windows.net/article/tGQtW0BM2EOAWvXXdqP-lA.png)

Headed towards diversity and inclusion

Diversity, equity and inclusion have become increasingly popular terms in the recruitment industry. With so much ongoing discussion of prejudice and racism, companies are exploring new ways of doing business and of showing employees and potential candidates that they are serious about diversifying their workplace. However, recent research found "no evidence of firms displaying positive preferences for diversity" and concluded that "firms need to take a hard look at their hiring processes and face up [to] the fact that they may not be as diversity-loving in practice as they are in intention." Although multiple studies show that diverse companies perform better, there is clearly a wide gap between diversity objectives on paper and actually achieving those diversity and inclusion goals.

Put your personal preferences aside

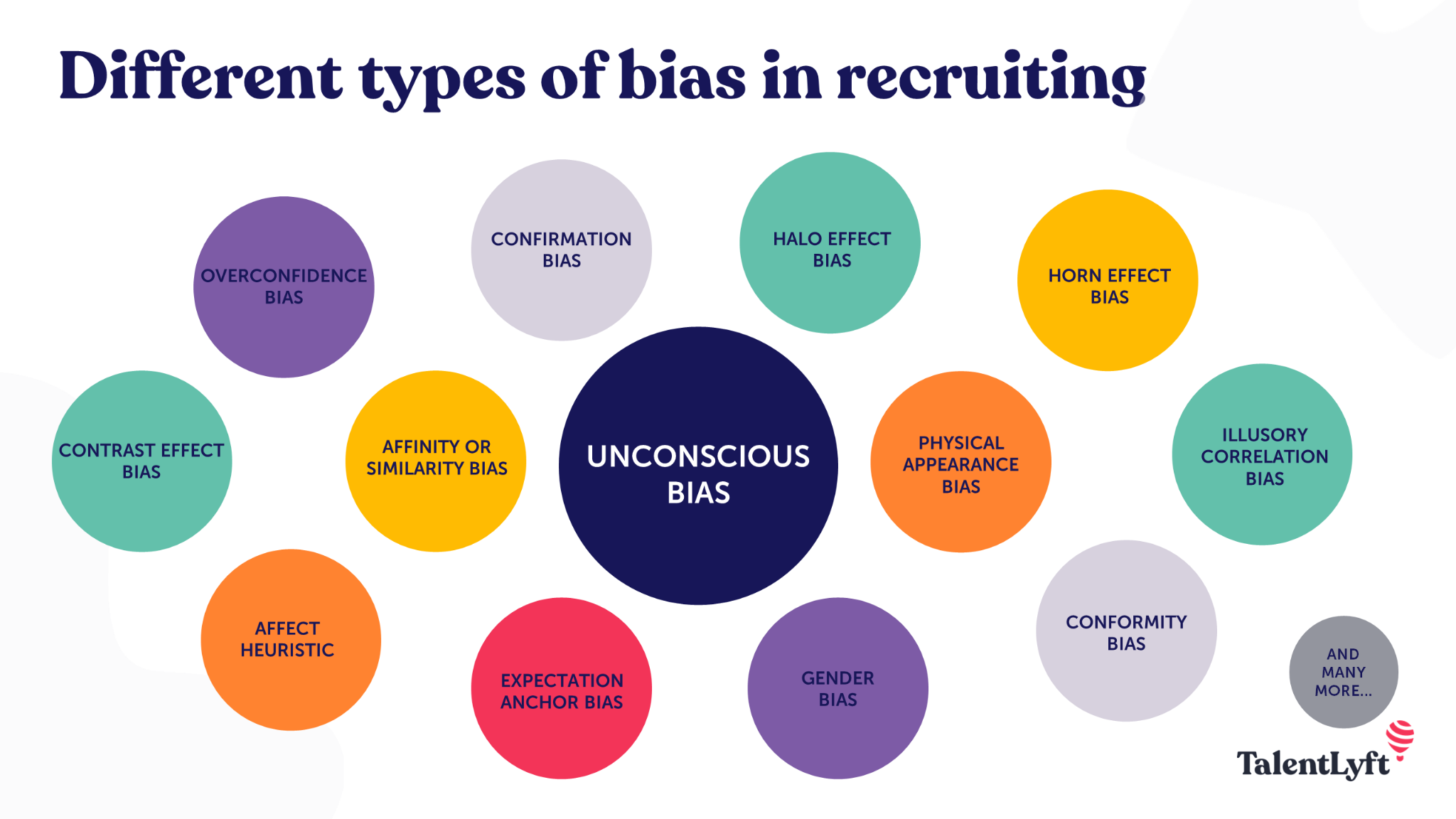

There are two main types of bias: conscious and unconscious. Conscious bias can be managed to some extent, but unconscious bias is trickier. How do you control something you're not aware of?

To show how widespread unconscious bias can be, and the many forms it can take in the workplace, let us introduce Lisa. Say Lisa has applied for a position at your company. Her CV shows that you two went to the same high school, and from her picture you assume you're about the same age. Your mind starts racing: Have you met before? Do you know the same people? You automatically feel a connection and affection towards Lisa because you have probably had similar experiences.

In another scenario, you notice that Lisa made several typos in her resume. You think to yourself that if Lisa didn't take the time to double-check her writing, she must be bad at many other things too. Although it's unreasonable to assume that a candidate who failed to catch typos in their resume will also be bad at, say, presenting and selling your products, this minor detail alters your perception of her and puts Lisa at a significant disadvantage.

These two examples, affinity bias and the horn effect, are among the most common unconscious and unintentional biases in the recruiting process. Imagine how many others shape our decisions without us even knowing they are there.

Unintentional bias is still bias

We're all susceptible to forming opinions about people based on our own backgrounds and past experiences. Most of the time we do it unconsciously, which makes it easy for bias to sneak into our day-to-day decisions. Even when you try to do the right thing, you can still introduce bias without meaning to. Think about it this way:

Wouldn't we also be biased if we intentionally and knowingly looked for candidates who fit our own definition of a diverse workforce?

That is why understanding how biases affect our decision-making process can greatly improve our ability to hire the most qualified candidates for the job.

Systemic bias is deeply rooted in the system

Multiple studies around the world show how personal details such as gender, race and age can affect whether candidates receive a callback. Their results indicate that minority groups tend to be systematically overlooked. Even hearing someone's name for the first time can trigger an emotional response and lead us to form an opinion about a candidate without realizing it.

Perhaps you once had a bad experience with someone called John, and regardless of the quality of your candidate's application, you form a negative opinion of him based solely on his name. Or maybe you have a child called John, and the name instantly evokes positive emotions. These are minor, first-instinct reactions, but the opportunities for bias are endless, not to mention all the other details that can sway us into unfairly judging someone's ability to do the job.

Unfortunately, centuries of racism and discrimination have led to widespread systemic bias, and the more we explore the subject, the clearer it becomes that there is no general solution in sight.

Can anonymized screening eliminate bias entirely?

One way to reduce bias is to screen candidates anonymously. Removing personal information such as name, picture, age and gender in the early stages of the hiring process can help prevent unfair first assumptions. We're all guilty of making assumptions about someone we see or meet for the first time: according to one study, 60% of interviewers decide whether a candidate would be a good fit within 15 minutes of meeting them.

Anonymized screening has its benefits, but it has limitations too. It doesn't remove bias; it only postpones it. You can hide information on a candidate's CV, but sooner or later you'll meet them in person and, consciously or not, form an opinion about them. Recruiters want to avoid bias at all costs, yet there is plenty of room for it to creep in at later stages of the hiring process. Even if we anonymize applications and remove basic personal information, a candidate's education and experience can still lead us to favor or overlook them unfairly.

Moments when AI failed

Artificial intelligence in recruitment has come a long way in automation, optimization and helping recruiters find qualified candidates more efficiently than ever before. AI is also praised for its potential to remove human bias completely. But can it? Only to a certain extent.

The examples of Amazon and HireVue show that relying on technology alone to solve bias is not a practical approach. Amazon developed an automated talent search tool that did not rate candidates in a gender-neutral way, and HireVue used automated scoring based on face-scanning technology to sort candidates by their responses. After widespread criticism and many questions about the ethics and fairness of such assessments, both companies abandoned these projects.

It turns out that the algorithms picked up biases from their human creators, showing that training a system on biased data only leads to biased decisions in the future. Both cases offer insight into the limitations of machine learning.

Illegal or not, it’s a dangerous play

More people than ever are raising concerns about the legal aspects of using AI to assess job applicants. Equal Employment Opportunity (EEO) laws prohibit job discrimination, but with the rapid development of technology and AI solutions, AI-driven assessments remain a legal grey area, and the technology is largely unregulated.

As stated in The New York Times, "under federal law, employers have wide discretion to decide which qualities are a 'cultural fit' for their organization. This allows companies to choose hiring criteria that could exclude certain groups of people and to hide this bias through automated hiring."

AI is a helping hand, not a magical solution

Technology helps us make decisions based on data rather than instinct, but it's important to remember that technology is developed and trained by humans. Many recruiters turn to technology for help without being aware of its limitations. As Fortune put it: "AI offers the promise that there exists some hidden constellation of data, too complex or subtle for HR executives or hiring managers to ever discern, that can predict which candidate will excel at a given role. In theory, the technology offers businesses the prospect of radically expanding the diversity of their candidate pool. In practice, though, critics warn, such software runs a high risk of reinforcing existing biases, making it harder for women, Black people and others from non-traditional backgrounds to get hired."

Completely objective talent assessment is something all recruiters strive for, but relying on technology alone to achieve it is an approach with many potential flaws.

Looking for technology that will keep your hiring process human but make it more efficient?

Our carefully tailored automation and AI-based features are designed to make recruiters' day-to-day work easier and leave more time for the strategic parts of the job. Get in touch with us to learn more.

So, what should you do after all?

Hiring is a deeply human process, and human interactions involve emotions. It's natural to have instincts and to form opinions based on them; those same instincts helped us develop and get to where we are today. Keep in mind that many different biases are present in our day-to-day lives, and it's up to us to learn how to recognize and reduce them.

Trying to eliminate all these different types of bias can be overwhelming and feel like walking through a minefield. Bias can't be entirely eliminated, but you and technology, together, have the power to control it. We can start by acknowledging that none of us is immune to bias, whether we like to admit it or not.
